CrimeSolutions uses rigorous research to inform you about what works in criminal justice, juvenile justice, and crime victim services. We set a high bar for the scientific quality of the evidence used to assign ratings: the more rigorous a study’s research design (e.g., randomized controlled trials, quasi-experimental designs), the more compelling the research evidence.[1]

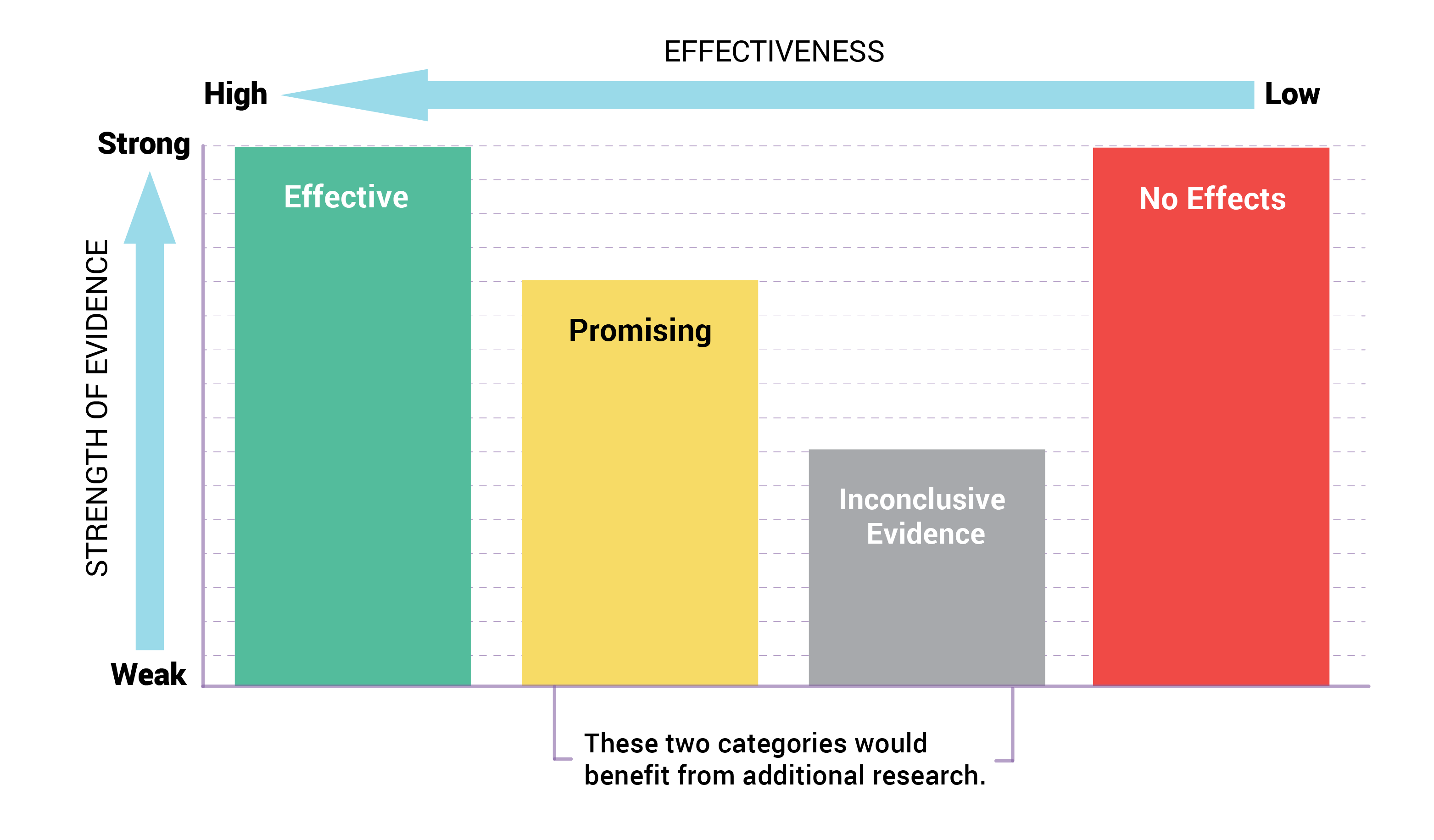

In the CrimeSolutions rating process, we address program and practice evaluations that sit along an evidence continuum with two axes: (1) effectiveness and (2) strength of evidence. See Exhibit 1 below.

Where a program or practice (referred to collectively as interventions) sits on the Effectiveness axis tells us how well it works in achieving criminal justice, juvenile justice, and victim services outcomes. Where it sits on the Strength of Evidence axis tells us how confident we can be in that determination. Effectiveness is determined by the outcomes of an evaluation in relation to the goals of the intervention. Strength of evidence for programs is determined by the rigor and design of the outcome evaluation, and by the number of evaluations. Strength of evidence for practices is determined by the rigor and design of the studies included in the meta-analysis.

On CrimeSolutions, interventions fall into one of five categories, listed below in order of effectiveness along the continuum:

- Rated as Effective: Interventions have strong evidence to indicate they achieve criminal justice, juvenile justice, and victim services outcomes when implemented with fidelity.

- Rated as Promising: Interventions have some evidence to indicate they achieve criminal justice, juvenile justice, and victim services outcomes. Included within the promising category are new, or emerging, interventions for which there is some evidence of effectiveness.

- Inconclusive Evidence: Interventions that made it past the initial review but, during the full review process, were determined to have evidence too inconclusive for a rating to be assigned. See Reasons for Rejecting Evaluation Studies. Interventions are not categorized as inconclusive because of identified or specific weaknesses in the interventions themselves. Instead, our reviewers determined that the available evidence did not support assigning a rating. Browse the programs reviewed but not assigned a rating or the practices reviewed but not assigned a rating.

- Rated as Ineffective: Interventions have strong evidence that they did not have the intended effects when trying to achieve justice-related outcomes. While interventions rated Ineffective may have had some positive effects, the overall rating is based on the preponderance of evidence.

- Rated as Negative Effects: Interventions have strong evidence that they had harmful effects when trying to achieve justice-related outcomes.

In addition to the five categories above, we also maintain a list of interventions for which the available evaluation evidence was not rigorous enough for our review process or that fell outside the scope of CrimeSolutions. Review screened-out program evaluations or screened-out meta-analyses for practices.

For more detail on how an intervention is slotted into one of these five categories, see: