We have identified thousands of programs and practices in our literature reviews, but not every intervention makes it onto CrimeSolutions with a rating.

We use rigorous research to inform you about what works in criminal justice, juvenile justice and crime victim services. We set a high bar for the scientific quality of the evidence used to assign ratings. Much of the evidence that we identify is either screened out before review or, upon review, determined to be inconclusive, in which case we are unable to assign a rating.

On this page, you will find:

- Lists of Interventions Identified but Not Rated

- Reasons for Rejecting Evaluation Studies

- Reasons for Rejecting Meta-Analyses

Lists of Interventions Identified but Not Rated

For both programs and practices, we maintain lists of:

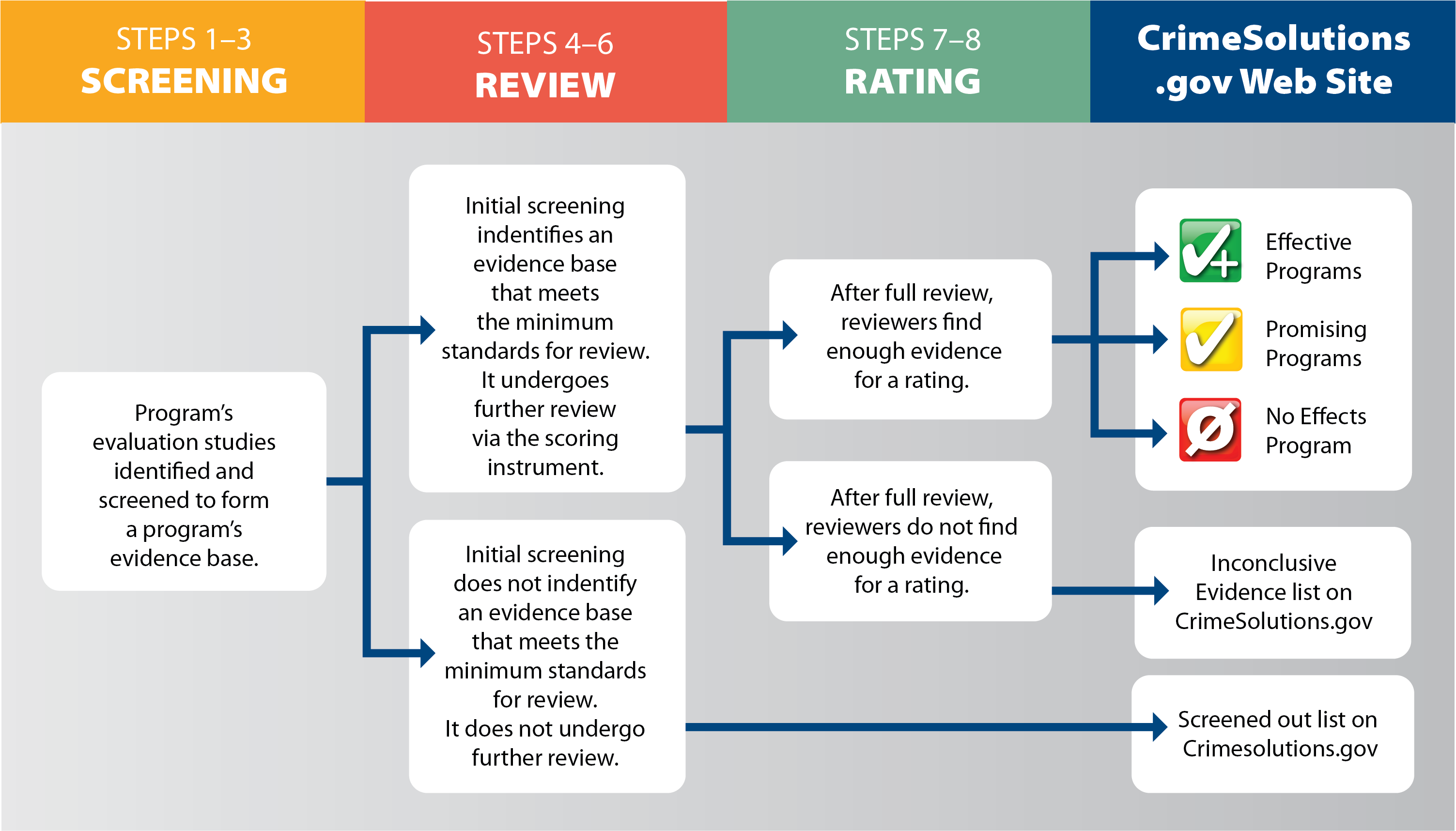

- Program evaluations and meta-analysis (for practices) that were screened out during the initial review. In the figure below, this occurs in Steps 1-3.

- Programs and practices that made it past the initial review but, during the full review process, the evidence was determined to be inconclusive and no rating was assigned. In the figure below, this occurs in Steps 7-8.

Interventions are not placed on these lists because of weaknesses in the interventions themselves. Instead, they appear here because of limitations in the available evidence: either the studies were screened out, or our reviewers determined that the evidence was inconclusive and no rating could be assigned.

We post these lists so that you can see the full breadth of interventions that we have reviewed for CrimeSolutions. These lists also demonstrate the need for additional evaluation research and meta-analysis to improve the evidence base for these interventions, so that they can be reviewed on CrimeSolutions.

See How We Rate Programs or How We Rate Practices for a complete description of each step.

Reasons for Rejecting Evaluation Studies

- Inadequate Design Quality: studies do not provide enough information or have significant limitations in the study design (for example, small sample size, threats to internal validity, or high attrition rates) such that it is not possible to establish a causal relationship between the program and justice-related outcomes.

- Limited or Inconsistent Outcome Evidence: studies are well-designed (although there may be some limitations) but show inconsistent or mixed results, such that it is not possible to determine the overall impact of the program on justice-related outcomes.

- Lacked Sufficient Info on Program Fidelity: studies are rigorous and well-designed and generally show no significant effects on justice-related outcomes, but they do not provide sufficient information on fidelity or adherence to the program model to determine whether the program was delivered as designed.

Study reviewers also have an override option. This option can be used when the reviewers feel that no confidence can be placed in the results. Reviewers must explain their reasoning in detail. Examples of reasons the study reviewers may use the override option include (1) anomalous findings that contradict the intent of the program and suggest the possibility of confounding causal variables, and (2) the use of inappropriate statistical analyses to examine the outcome data. Using the override option results in the automatic rejection of a study.

Reasons for Rejecting Meta-Analyses

- Inadequate Design Quality: meta-analyses can be rejected if they do not provide enough information about the design or have significant limitations in the design (for example, they did not measure the methodological quality of the included studies or did not properly weight the results of the included studies) such that it is not possible to place confidence in the overall results of the review.

- Low Internal Validity: meta-analyses can be rejected if they have low internal validity, meaning that the overall mean effect size was based on results from included studies whose research designs are vulnerable to threats that could bias the effect estimate. Randomized controlled trials (RCTs) have the strongest inherent internal validity. A meta-analysis's internal validity score decreases as the proportion of included studies with non-RCT designs (i.e., quasi-experimental designs) increases.

- Lacked Sufficient Information on Statistical Significance: even rigorous, well-designed meta-analyses are rejected if they do not provide sufficient information to determine whether the mean effect sizes were statistically significant. The statistical significance of a mean effect size is needed to determine an outcome's final evidence rating.
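To illustrate what "properly weighting" study results and determining the "statistical significance of a mean effect size" can involve, here is a minimal sketch of one common approach: fixed-effect, inverse-variance pooling followed by a two-sided z-test at the 0.05 level. This page does not specify how CrimeSolutions reviewers perform or check these calculations, so treat this as an illustrative assumption rather than the review procedure. It assumes each included study reports an effect size and its sampling variance; the function name `pooled_effect` is hypothetical.

```python
import math


def pooled_effect(effects, variances, z_crit=1.96):
    """Hypothetical helper: fixed-effect (inverse-variance) mean effect size.

    Assumes each study supplies an effect size and its sampling variance.
    Returns the weighted mean, its standard error, a 95% confidence
    interval, a two-sided p-value, and whether the mean is significant.
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need one variance per effect size")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Weight each study by the inverse of its variance, so more precise
    # (usually larger) studies count more toward the mean.
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    mean = sum(w * d for w, d in zip(weights, effects)) / total_w

    # Standard error of the pooled mean under the fixed-effect model.
    se = math.sqrt(1.0 / total_w)
    z = mean / se

    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    lower, upper = mean - z_crit * se, mean + z_crit * se

    # Significant at the 0.05 level if the 95% CI excludes zero.
    significant = lower > 0 or upper < 0
    return {
        "mean": mean,
        "se": se,
        "ci": (lower, upper),
        "p_value": p_value,
        "significant": significant,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not data from any reviewed meta-analysis.
    result = pooled_effect([0.2, 0.3, 0.1], [0.01, 0.02, 0.04])
    print(result)
```

Without the variances (or standard errors) of the included studies, neither the weights nor the confidence interval can be computed, which is why a meta-analysis that omits this information leaves the significance of its mean effect size undetermined.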